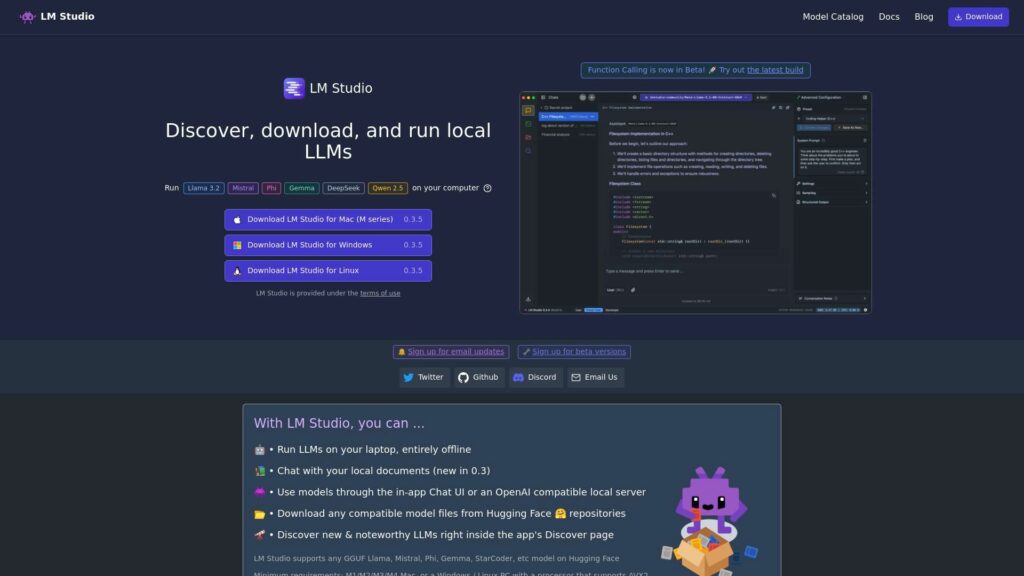

LM Studio is a desktop app for running large language models (LLMs) entirely offline on your laptop or desktop. Whether you're on a Mac with an Apple Silicon chip (M1/M2/M3/M4) or a Windows/Linux PC with AVX2 support, LM Studio lets you:

– 🤖 Run LLMs locally for privacy and control.

– 📚 Chat with your local documents (new in version 0.3).

– 👾 Use models via an in-app Chat UI or an OpenAI-compatible local server.

– 📂 Download compatible models directly from Hugging Face repositories.

– 🔭 Explore new and noteworthy LLMs on the app's Discover page.
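To give a feel for the OpenAI-compatible local server mentioned in the list, here is a minimal sketch that sends one chat message using only the Python standard library. It assumes the server has been started inside LM Studio and is listening on `http://localhost:1234/v1`; if your setup uses a different port, change `BASE_URL`. The model name `local-model` is a placeholder rather than a real identifier, and the helper names `build_chat_request` and `ask` are made up for this example.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at this address.
# Adjust the port if you changed it in the app.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        # Placeholder name; replace with the identifier of the model
        # you have loaded in LM Studio.
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a model loaded and the server running, `print(ask("Say hello in one sentence."))` should print the model's reply. Because the request never leaves `localhost`, the prompt and the answer stay on your machine.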

LM Studio supports models in the GGUF format, such as Llama, Mistral, and StarCoder. It's also free for personal use and does not collect your data, so your conversations stay private.
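If you're not sure whether a model file you downloaded is actually GGUF, a quick check of its first bytes can help. The sketch below assumes the common GGUF layout, where a file begins with the four ASCII bytes `GGUF` followed by a little-endian 32-bit version number. The function names are made up for this example, and this is not how LM Studio itself validates models.

```python
import struct

# Assumption: GGUF files begin with these four bytes.
GGUF_MAGIC = b"GGUF"


def parse_gguf_header(header):
    """Return the GGUF version from the first 8 bytes, or None if not GGUF."""
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Bytes 4..7 hold the format version as a little-endian unsigned int.
    (version,) = struct.unpack("<I", header[4:8])
    return version


def gguf_version(path):
    """Read only the first 8 bytes of a file and report its GGUF version."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(8))
```

Calling `gguf_version("path/to/model.gguf")` returns a version number for a GGUF file and `None` for anything else, without loading the whole model into memory.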

Ready to get started? Visit LM Studio to learn more and unlock the power of offline AI!